Hi there, and welcome. This content is still relevant,

but fairly old. If you are interested in keeping up-to-date with similar

articles on profiling, performance testing, and writing performant

code, consider signing up to the Four Steps to Faster Software newsletter. Thanks!

For the next instalment of this series on low-latency tuning at LMAX Exchange, I'm going to talk about reducing jitter introduced by the operating system.

Our applications typically execute many threads, running within a JVM, which in turns runs atop the Linux operating system. Linux is a general-purpose multi-tasking OS, which can target phones, tablets, laptops, desktops and server-class machines. Due to this broad reach, it can sometimes be necessary to supply some guidance in order to achieve the lowest latency.

LMAX Exchange services rely heavily on the Disruptor for fast inter-thread communication, and as such, we have a handful of 'hot' threads that we wish to always be on-CPU.

Below is a simplified diagram of one of the low-latency paths through our exchange. We receive FIX requests from customers at a gateway, these requests are multiplexed into a Disruptor instance, where the consumer thread sends messages onto a 10Gb network via UDP. Those messages then arrive at the matching engine, where they are processed before a response is sent out to the customer via the FIX gateway.

Focussing on the (simplified) matching engine, we can see that there are 4 threads of execution that will affect end-to-end latency if there is jitter present in the system (in reality, there are more, this diagram is for illustrative purposes only!).

To ensure data consistency, the business-logic thread will not process a message until it has been written to the journal (this is covered in more detail in previous posts). So it can be seen that jitter experienced on any of these threads will affect the end-to-end latency experienced by customers.

Enter the dark arts of CPU-isolation, thread-pinning and Linux tracing tools.

== Accumulator Message Transit Latency (ns) ==

mean 60879

min 76

50.00% 168

90.00% 256

99.00% 2228239

99.90% 8126495

99.99% 10485823

99.999% 11534399

99.9999% 11534399

max 11534399

count 3595101

Another possible cause of scheduling jitter is likely to be down to the OS scheduler moving processes around as different tasks become runnable. The important threads in the application are at the mercy of the scheduler, which can, when invoked decide to run another process on the current CPU. When this happens, the running thread's context will be saved, and it will be shifted back into the schedulers run-queue (or possibly migrated to another CPU entirely).

To find out whether this is happening to the threads in our application, we can turn to perf again and sample trace events emitted by the scheduler. In this case, sampling the sched_stat_runtime event will show what CPU has been playing host to the application threads.

One row of output from perf script shows that the java thread executed on CPU1 for a duration of 1.000825 milliseconds:

java 11372 [001] 3055.140623: sched:sched_stat_runtime: comm=java pid=11372 runtime=1000825 [ns] vruntime=81510486145 [ns]

A bit of sorting and counting will show exactly how the process was moved around the available CPUs during its lifetime:

perf script | grep "java 11372" | awk '{print $3}' | sort | uniq -c

...

16071 [000]

10858 [001]

5778 [002]

7230 [003]

So this thread mostly ran on CPUs 0 and 1, but also spent some time on CPUs 2 and 3. Moving the process around is going to require a context switch, and produce cache invalidation effects. While these are unlikely to be the sources of maximum latency, in order to start improving the worst-case, it will be necessary to stop migration of these processes.

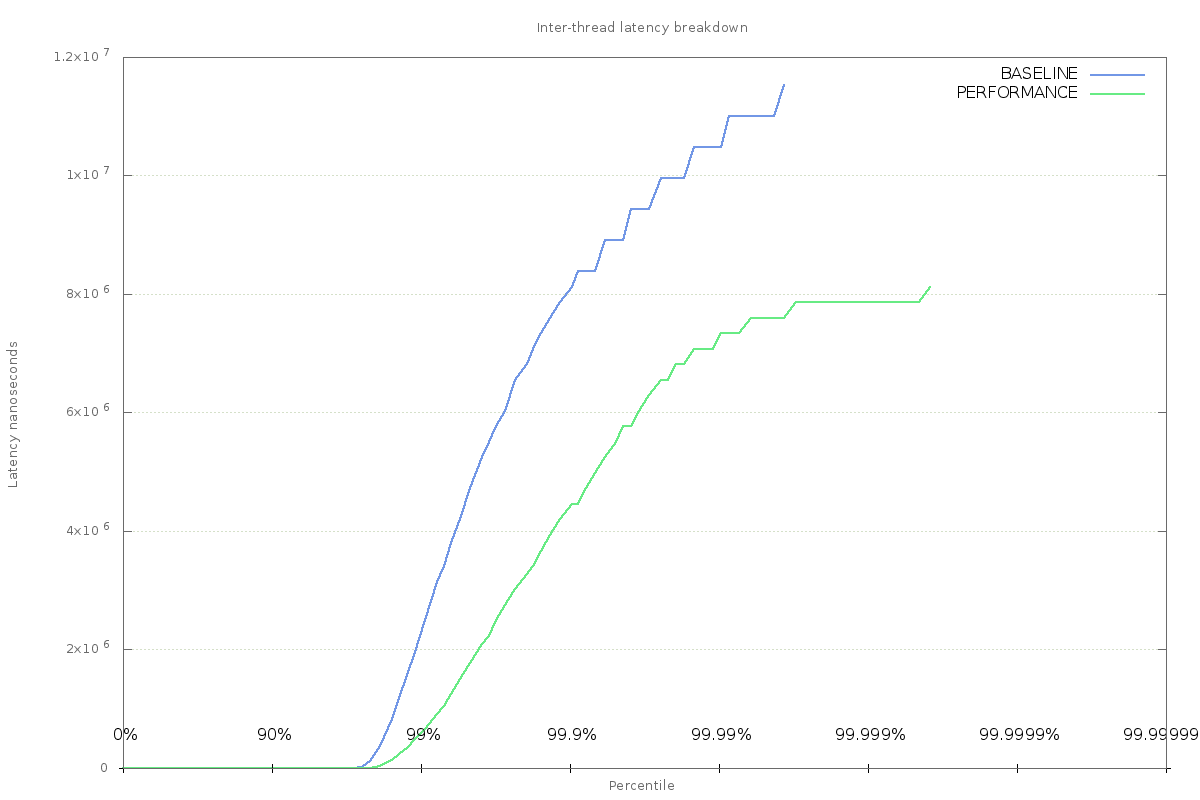

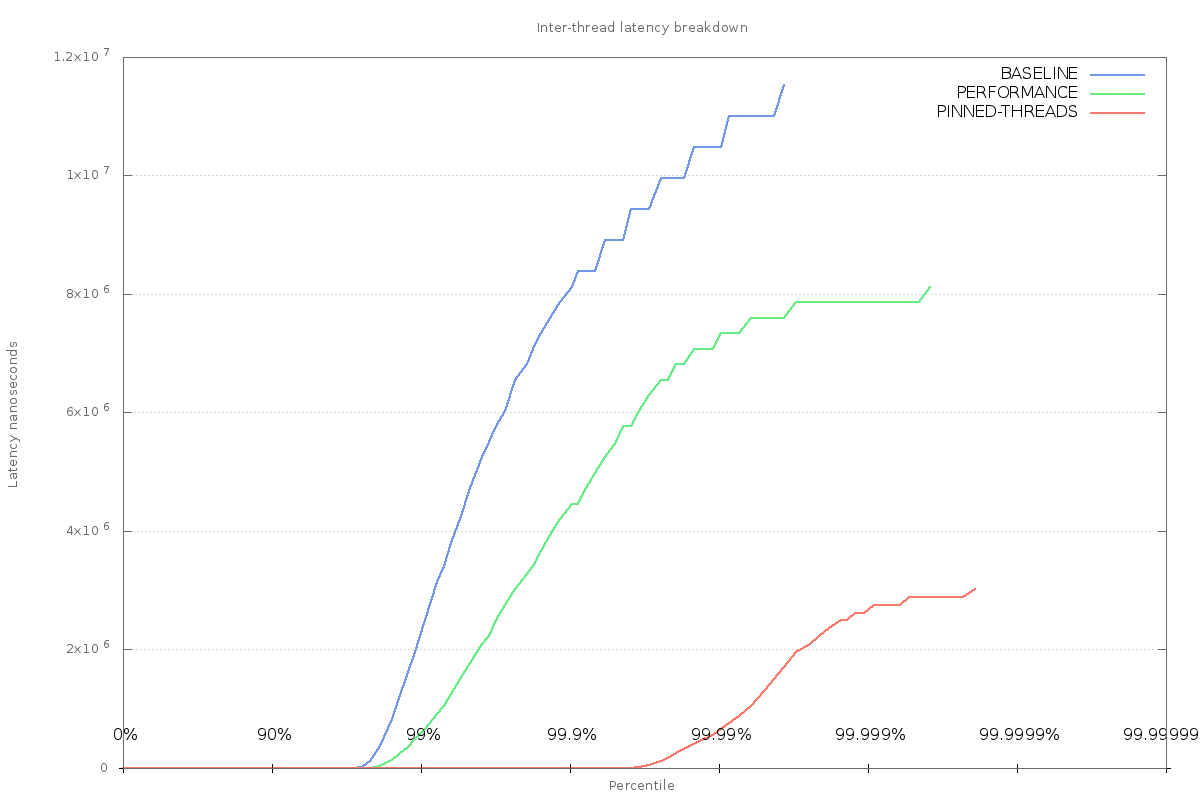

Thread affinity can be used to force processes to run on a specific CPU or set of CPUs. This achieved by either using taskset when launching a program, or the sched_setaffinity system call from within an application. Using this technique to stop process migration of latency-sensitive threads has a positive effect on the latency jitter experienced in the application:

This result implies that forcing the threads to run on a single CPU can help reduce inter-thread latency. Whether this is down to the scheduler making better decisions about where to run other processes, or simply because there is less context switching is not clear.

One thing to look out for is the fact that no effort has been made to stop the scheduler from running other tasks on those CPUs. There are still multi-millisecond delays in message passing, and this could be down to other processes being run on the CPU that the application thread has been restricted to.

Returning to perf and this time capturing all sched_stat_runtime events for a specific CPU (in this case 1) will show what other processes are being scheduled while the application is running:

perf record -e "sched:sched_stat_runtime" -C 1

Stripping out everything but the process name, and counting occurrences in the event trace shows that while the java application was running most of the time, there are plenty of other processes that were scheduled during the application's execution time:

45514 java

60 kworker/1:2

26 irq/39-DLL0665:

24 rngd

15 rcu_sched

9 gmain

8 goa-daemon

7 chrome

6 ksoftirqd/1

5 rtkit-daemon

At this point, it's time to remove the target CPUs from the OS's scheduling domain. This can be done with the isolcpus boot parameter (i.e. add isolcpus=<cpu-list> to grub.conf), or by using the cset command from the cpuset package.

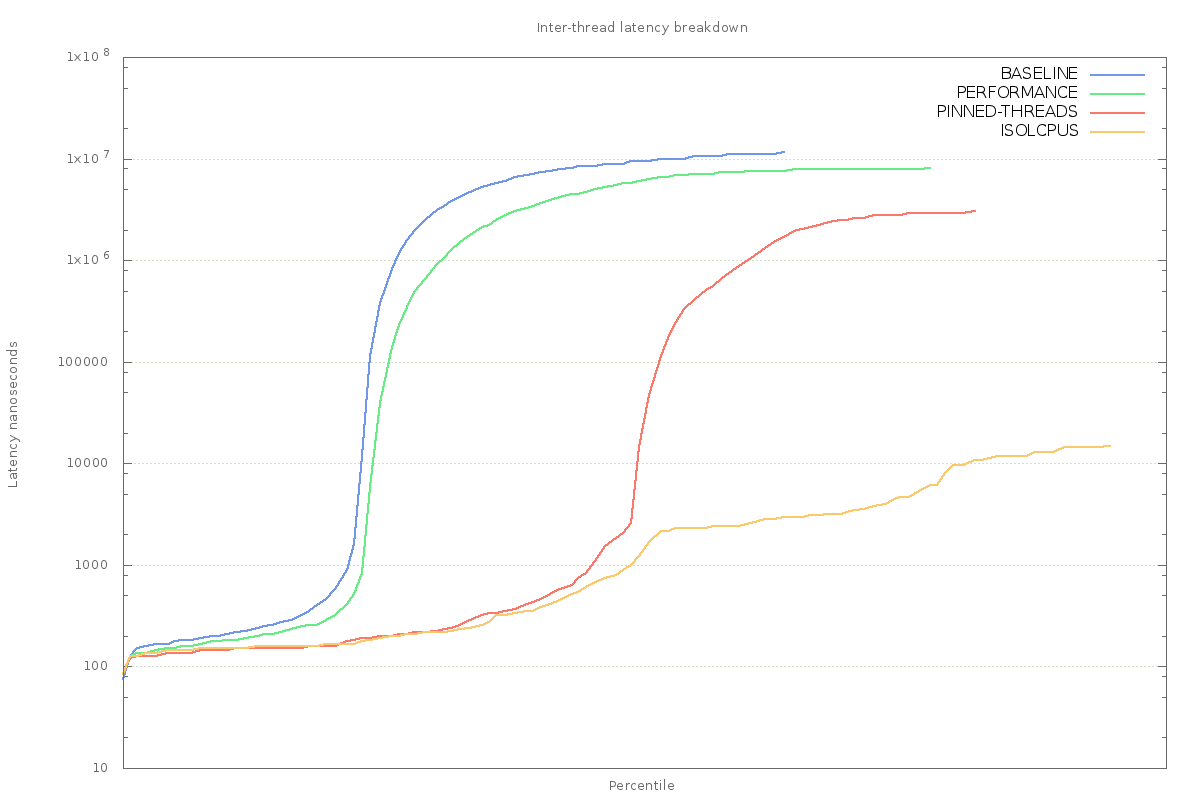

Using this method, the scheduler is restricted from running other user-space processes on the CPUs hosting the latency-sensitive application threads. In combination with setting the thread affinity, this should mean that the application threads will always have CPU resource and will be effectively always running.

The difference in inter-thread latency is dramatic - maximum latency is down to 14 microseconds:

== Accumulator Message Transit Latency (ns) ==

mean 144

min 84

50.00% 144

90.00% 160

99.00% 208

99.90% 512

99.99% 2432

99.999% 3584

99.9999% 11776

max 14848

count 3595101

The difference is so great, that it's necessary to use a log-scale for the y-axis of the chart.

Note that the difference will not be so great on a server-class machine with lots of spare processing power. The effect here is magnified by the fact that the OS only has 4 CPUs (on my laptop) to work with, and a desktop distribution of Linux. So there is much more scheduling pressure than would be present on a server-class machine.

Using perf once again to confirm that other processes are not running on the reserved CPUs shows that there is still some contention to deal with:

81130 java

2 ksoftirqd/1

43 kworker/1:0

1 kworker/1:1H

2 kworker/3:1

1 kworker/3:1H

11 swapper

These processes starting with 'k' are kernel threads that deal with house-keeping tasks on behalf of the OS, 'swapper' is the Linux idle process, which is scheduled whenever there is no work to be executed on a CPU.

This post describes the start of the journey towards tuning Linux for low-latency applications taken at LMAX Exchange. Dealing with other causes of runtime jitter are covered in the follow-up post.

Follow @epickrram

Our applications typically execute many threads, running within a JVM, which in turns runs atop the Linux operating system. Linux is a general-purpose multi-tasking OS, which can target phones, tablets, laptops, desktops and server-class machines. Due to this broad reach, it can sometimes be necessary to supply some guidance in order to achieve the lowest latency.

LMAX Exchange services rely heavily on the Disruptor for fast inter-thread communication, and as such, we have a handful of 'hot' threads that we wish to always be on-CPU.

Below is a simplified diagram of one of the low-latency paths through our exchange. We receive FIX requests from customers at a gateway, these requests are multiplexed into a Disruptor instance, where the consumer thread sends messages onto a 10Gb network via UDP. Those messages then arrive at the matching engine, where they are processed before a response is sent out to the customer via the FIX gateway.

Focussing on the (simplified) matching engine, we can see that there are 4 threads of execution that will affect end-to-end latency if there is jitter present in the system (in reality, there are more, this diagram is for illustrative purposes only!).

- The thread which is polling the network for inbound traffic

- The thread that executes business logic, generating responses

- The journaller thread, which writes all in-bound messages to disk

- The publisher thread, responsible for sending responses back to the gateway

To ensure data consistency, the business-logic thread will not process a message until it has been written to the journal (this is covered in more detail in previous posts). So it can be seen that jitter experienced on any of these threads will affect the end-to-end latency experienced by customers.

The aim is to reduce that jitter to an absolute minimum. To do this, we use the Disruptor's BusySpinWaitStrategy so that message-passing between publisher and consumer is as 'instantaneous' as allowed by the platform. The Disruptor has different strategies for waiting, and each is suited to different situations. In this case, since we want to reduce latency, busy-spinning is the best choice. It does however, come with caveats.

If these threads are to be always runnable, then they need to have access to CPU resource at all times. As mentioned before, Linux is a multi-tasking general-purpose operating system, whose default mode is to schedule a wide variety of tasks with different latency requirements. If the operating system decides to run another task on the CPU currently executing one of the busy-spinning threads, then unwanted and unpredictable latency will creep into the system.

Enter the dark arts of CPU-isolation, thread-pinning and Linux tracing tools.

An example

In order to demonstrate the techniques we used at LMAX Exchange to achieve consistent, low inter-thread latency, I'm going to refer to an example application that can be used to measure such latencies introduced by the host platform.

The application has three threads with low-latency requirements. A 'producer' thread, which reads messages from a datasource, and writes them to a Disruptor instance, a 'logic' thread, which performs some arbitrary logic, and a 'journaller' thread, which writes messages to disk. Both logic and journaller threads are consumers of the Disruptor instance, using a busy-spin wait strategy.

The producer thread performs a call to System.nanoTime() and writes the result into the message before passing to the Disruptor. The logic thread reads a message from the Disruptor, and immediately calls System.nanoTime(). The delta between these two timestamps is the time taken to transit the Disruptor. These deltas are stored in an HdrHistogram and reported at application exit.

Given that very little work is being done by the logic thread, it is reasonable to expect that inter-thread latency will be low, and consistent. In reality however, this is not the case.

I'm running these tests on my 4-CPU laptop, so operating system scheduling jitter is magnified; it would be less pronounced on a 64-CPU server-class machine, for instance, but the techniques used to investigate and reduce jitter are effectively the same.

System-jitter baseline

Executing the example application for a period of time and inspecting the results shows that there is a large variation in the time taken to transit the Disruptor:

== Accumulator Message Transit Latency (ns) ==

mean 60879

min 76

50.00% 168

90.00% 256

99.00% 2228239

99.90% 8126495

99.99% 10485823

99.999% 11534399

99.9999% 11534399

max 11534399

count 3595101

The fastest message to get through the Disruptor was 76 nanoseconds, but things rapidly degrade from there: 1 in 100 messages took longer than 2 milliseconds to pass between threads. The longest delay was 11 milliseconds - a difference of several orders of magnitude.

Clearly something is happening on the system that is negatively affecting latency. Pauses introduced by the runtime (JVM) can be ruled out, as the application is garbage-free, performs warm-up cycles to exercise the JIT, and guaranteed safepoints are disabled. This can be confirmed by enabling safepoint logging, looking at the GC log and stdout output when -XX:+PrintCompilation is enabled.

Clearly something is happening on the system that is negatively affecting latency. Pauses introduced by the runtime (JVM) can be ruled out, as the application is garbage-free, performs warm-up cycles to exercise the JIT, and guaranteed safepoints are disabled. This can be confirmed by enabling safepoint logging, looking at the GC log and stdout output when -XX:+PrintCompilation is enabled.

CPU Speed

Modern CPUs (especially on laptops) are designed to be power efficient, this means that the OS will typically try to scale down the clock rate when there is no activity. On Intel CPUs, this is partially handled using power-states, which allow the OS to reduce CPU frequency, meaning less power draw, and less thermal overhead.

On current kernels, this is handled by the CPU scaling governor. You can check your current setting by looking in the file

/sys/devices/system/cpu/cpu0/cpufreq/scaling_governor

there is one directory entry in /sys/devices/system/cpu/ per available CPU on the machine. On my laptop, this is set to powersave mode. To see available governors:

cat /sys/devices/system/cpu/cpu0/cpufreq/scaling_available_governors

which tells me that I have two choices:

Before making a change though, let's make sure that powersave is actually causing issues.

To do this, perf_events can be used to monitor the CPU's P-state while the application is running:

perf record -e "power:cpu_frequency" -a

This command will sample the cpu_frequency trace point written to by the intel cpufreq driver on all CPUs. This information comes from an MSR on the chip which holds the FSB speed.

Filtering entries to include only those samples taken when java was executing shows some variation in the reported frequency; clearly not ideal for achieving the lowest latency:

java 2804 [003] 3327.796741: power:cpu_frequency: state=1500000 cpu_id=3

java 2804 [003] 3328.089969: power:cpu_frequency: state=3000000 cpu_id=3

java 2804 [003] 3328.139009: power:cpu_frequency: state=2500000 cpu_id=3

java 2804 [003] 3328.204063: power:cpu_frequency: state=1000000 cpu_id=3

This script can be used to set the scaling governor to performance mode to reduce the variation:

sudo bash ./set_cpu_governor.sh performance

Running the application again with the performance governor enabled produces better results for inter-thread latency. Monitoring with perf shows that the cpu_frequency events are no longer emitted.

== Accumulator Message Transit Latency (ns) ==

mean 23882

min 84

50.00% 152

90.00% 208

99.00% 589827

99.90% 4456479

99.99% 7340063

99.999% 7864351

99.9999% 8126495

max 8126495

count 3595101

Though there is still a max latency of 8ms, it has been reduced from the previous value of 11ms.

On current kernels, this is handled by the CPU scaling governor. You can check your current setting by looking in the file

/sys/devices/system/cpu/cpu0/cpufreq/scaling_governor

there is one directory entry in /sys/devices/system/cpu/ per available CPU on the machine. On my laptop, this is set to powersave mode. To see available governors:

cat /sys/devices/system/cpu/cpu0/cpufreq/scaling_available_governors

which tells me that I have two choices:

- performance

- powersave

Before making a change though, let's make sure that powersave is actually causing issues.

To do this, perf_events can be used to monitor the CPU's P-state while the application is running:

perf record -e "power:cpu_frequency" -a

This command will sample the cpu_frequency trace point written to by the intel cpufreq driver on all CPUs. This information comes from an MSR on the chip which holds the FSB speed.

Filtering entries to include only those samples taken when java was executing shows some variation in the reported frequency; clearly not ideal for achieving the lowest latency:

java 2804 [003] 3327.796741: power:cpu_frequency: state=1500000 cpu_id=3

java 2804 [003] 3328.089969: power:cpu_frequency: state=3000000 cpu_id=3

java 2804 [003] 3328.139009: power:cpu_frequency: state=2500000 cpu_id=3

java 2804 [003] 3328.204063: power:cpu_frequency: state=1000000 cpu_id=3

This script can be used to set the scaling governor to performance mode to reduce the variation:

sudo bash ./set_cpu_governor.sh performance

Running the application again with the performance governor enabled produces better results for inter-thread latency. Monitoring with perf shows that the cpu_frequency events are no longer emitted.

== Accumulator Message Transit Latency (ns) ==

mean 23882

min 84

50.00% 152

90.00% 208

99.00% 589827

99.90% 4456479

99.99% 7340063

99.999% 7864351

99.9999% 8126495

max 8126495

count 3595101

Though there is still a max latency of 8ms, it has been reduced from the previous value of 11ms.

Process migration

...

16071 [000]

10858 [001]

5778 [002]

7230 [003]

So this thread mostly ran on CPUs 0 and 1, but also spent some time on CPUs 2 and 3. Moving the process around is going to require a context switch, and produce cache invalidation effects. While these are unlikely to be the sources of maximum latency, in order to start improving the worst-case, it will be necessary to stop migration of these processes.

Thread affinity

One thing to look out for is the fact that no effort has been made to stop the scheduler from running other tasks on those CPUs. There are still multi-millisecond delays in message passing, and this could be down to other processes being run on the CPU that the application thread has been restricted to.

Returning to perf and this time capturing all sched_stat_runtime events for a specific CPU (in this case 1) will show what other processes are being scheduled while the application is running:

perf record -e "sched:sched_stat_runtime" -C 1

Stripping out everything but the process name, and counting occurrences in the event trace shows that while the java application was running most of the time, there are plenty of other processes that were scheduled during the application's execution time:

45514 java

60 kworker/1:2

26 irq/39-DLL0665:

24 rngd

15 rcu_sched

9 gmain

8 goa-daemon

7 chrome

6 ksoftirqd/1

5 rtkit-daemon

CPU Isolation

Using this method, the scheduler is restricted from running other user-space processes on the CPUs hosting the latency-sensitive application threads. In combination with setting the thread affinity, this should mean that the application threads will always have CPU resource and will be effectively always running.

The difference in inter-thread latency is dramatic - maximum latency is down to 14 microseconds:

== Accumulator Message Transit Latency (ns) ==

mean 144

min 84

50.00% 144

90.00% 160

99.00% 208

99.90% 512

99.99% 2432

99.999% 3584

99.9999% 11776

max 14848

count 3595101

The difference is so great, that it's necessary to use a log-scale for the y-axis of the chart.

Note that the difference will not be so great on a server-class machine with lots of spare processing power. The effect here is magnified by the fact that the OS only has 4 CPUs (on my laptop) to work with, and a desktop distribution of Linux. So there is much more scheduling pressure than would be present on a server-class machine.

Using perf once again to confirm that other processes are not running on the reserved CPUs shows that there is still some contention to deal with:

81130 java

2 ksoftirqd/1

43 kworker/1:0

1 kworker/1:1H

2 kworker/3:1

1 kworker/3:1H

11 swapper

These processes starting with 'k' are kernel threads that deal with house-keeping tasks on behalf of the OS, 'swapper' is the Linux idle process, which is scheduled whenever there is no work to be executed on a CPU.

Conclusion

CPU isolation and thread affinity are powerful tools that can help reduce runtime jitter introduced by the OS scheduler. Linux tracing tools such as perf_events are invaluable for inspecting the state of running processes when determining sources of jitter. For low-latency applications, orders-of-magnitude reductions in jitter can be achieved by applying these techniques.

This post has introduced some techniques for observing and fixing system jitter. Examples in the post were generated using the application available in this github repository, where there is also a walk-through of the steps used to generate the data for this post.

This post has introduced some techniques for observing and fixing system jitter. Examples in the post were generated using the application available in this github repository, where there is also a walk-through of the steps used to generate the data for this post.

There's more..

This post describes the start of the journey towards tuning Linux for low-latency applications taken at LMAX Exchange. Dealing with other causes of runtime jitter are covered in the follow-up post.

Follow @epickrram